Understanding Software Bug Severity Levels: A 2025 Comprehensive Guide

by Yuliia Starostenko | March 10, 2015 10:00 am

Updated in September 2025.

Bug severity levels in software testing play a key role in the QA process. They help QA teams classify defects, understand how serious an issue is, and evaluate its impact on the software. If you’re looking for a clear explanation of what bug severity means in testing, this guide breaks it down through practical examples, classification rules, and its role in the overall QA process.

You’ll also learn the difference between severity and priority, and how to classify bugs correctly to improve triage, save time, and avoid costly mistakes.

What is Bug Severity and Why Does It Matter?

Bug severity measures a defect’s technical impact on the software’s core functionality. It reflects the damage a bug causes, ranging from complete system crashes to minor cosmetic issues. Assessing severity accurately gives teams a reliable picture of risk, which informs prioritization, helps allocate resources, and speeds up release cycles.

Quick note: severity doesn’t mean urgency. A highly severe bug does not automatically need an immediate fix; deciding how soon a bug gets fixed is the job of priority.

Severity vs Priority: What’s the Difference?

Understanding the difference between bug severity and priority is crucial for effective bug management and helps teams decide what to fix and when.

- Bug Severity describes how much a defect interferes with the software’s core functionality. It reflects the issue’s seriousness from a technical perspective, regardless of its business urgency.

- Bug Priority refers to the order in which defects should be addressed, based on strategic factors like user needs, deadlines, or upcoming releases — not necessarily how critical the bug is.

The key differences between severity and priority are summarized in the table below:

| Aspect | Bug Severity | Bug Priority |
|---|---|---|
| Definition | How much a bug impacts software functionality | How soon a bug should be fixed |
| Basis | Technical impact and disruption | Business impact, deadlines, and urgency |
| Changeability | Usually consistent throughout the project | Often changes based on project phase and stakeholder input |
| Assessment Owner | Typically assessed by QA/testers | Often decided by product owners or managers |
| Example | Application crashes on launch (high severity) | Minor typo on the homepage before a major release (high priority) |

Example Case:

A payment system failure causing transaction loss is a critical-severity bug and usually has high priority. A typo in a rarely visited help page is a low-severity bug. Still, it might be a high priority if a product launch presentation depends on perfect user-facing content.

By clearly defining severity and priority, teams ensure bugs are correctly addressed, balancing technical impact with business goals — a practice especially relevant in Agile testing workflows[1] where priorities evolve rapidly.
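To make the distinction concrete, here is a minimal Python sketch that stores severity and priority as two independent fields on a bug and orders a backlog by priority first, using severity only as a tiebreaker. The `Bug` class, the three-step `Priority` scale, and the sort rule are illustrative assumptions rather than a standard; only the severity scale mirrors the five levels described in this guide.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    # Lower number = more severe, matching the 1-5 scale in this guide.
    BLOCKER = 1
    CRITICAL = 2
    MAJOR = 3
    MINOR = 4
    TRIVIAL = 5


class Priority(IntEnum):
    # Hypothetical three-step priority scale; lower number = fix sooner.
    HIGH = 1
    MEDIUM = 2
    LOW = 3


@dataclass
class Bug:
    title: str
    severity: Severity  # technical impact, usually set by QA
    priority: Priority  # business urgency, usually set by the product owner


def triage_order(bugs: list[Bug]) -> list[Bug]:
    """Sort by priority first; severity only breaks ties."""
    return sorted(bugs, key=lambda b: (b.priority, b.severity))


backlog = [
    Bug("Typo on help page before launch demo", Severity.TRIVIAL, Priority.HIGH),
    Bug("Payment failure loses transactions", Severity.CRITICAL, Priority.HIGH),
    Bug("Misaligned form label", Severity.MINOR, Priority.LOW),
]

for bug in triage_order(backlog):
    print(bug.priority.name, bug.severity.name, bug.title)
```

With this ordering, the launch-related typo is handled before the misaligned label even though its severity is lower, which matches the example case above.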

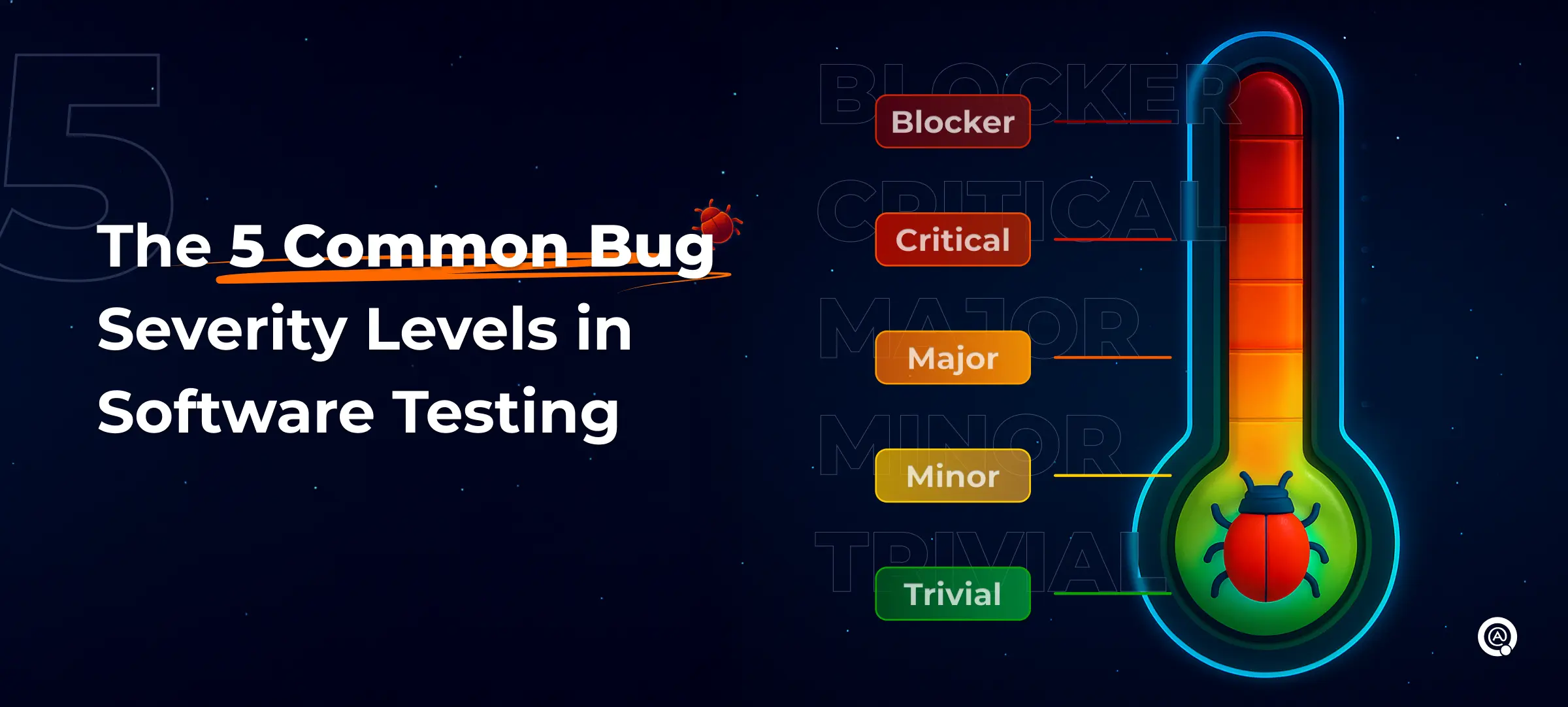

The 5 Common Bug Severity Levels in Software Testing

Bug severity levels help QA and development teams classify defects based on how seriously they affect the software’s performance or functionality. Below are the five most common severity levels used in modern software testing:

1. Blocker → Severity Level 1

↳ Definition: Completely prevents the use or testing of the system.

↳ Why it matters: These bugs block all progress and must be resolved immediately.

↳ Example: The app crashes on launch; login is impossible on any device.

2. Critical → Severity Level 2

↳ Definition: Severely impacts significant functionality or causes serious issues like data loss or security breaches.

↳ Why it matters: While partial use may be possible, these bugs pose high risks and require urgent fixes.

↳ Example: A broken payment system or exposure of confidential user data.

3. Major → Severity Level 3

↳ Definition: Disrupts key features, though the system remains largely operational.

↳ Why it matters: These bugs affect user workflows or core tasks and must be addressed promptly.

↳ Example: The checkout button fails intermittently; there is untranslated text in a key user interface.

4. Minor → Severity Level 4

↳ Definition: Causes minor issues that do not interfere with core functionality.

↳ Why it matters: These bugs don’t block usage but may reduce user satisfaction or visual consistency.

↳ Example: Misaligned text on a form; incorrect tooltip label.

5. Trivial → Severity Level 5

↳ Definition: Cosmetic or stylistic issues with minimal or no functional impact.

↳ Why it matters: Typically scheduled for future releases or lower-priority sprints.

↳ Example: Slight button color mismatch; inconsistent font styling.

Defects at all of these levels often surface during functional testing[2], which evaluates how well features operate under real conditions.
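One way to keep these definitions consistent across testers is to turn them into a short checklist evaluated from the most severe case to the least severe. The Python sketch below assumes a hypothetical `Impact` record of yes/no answers; the questions paraphrase the five definitions above and would need tuning for a real product.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    BLOCKER = 1
    CRITICAL = 2
    MAJOR = 3
    MINOR = 4
    TRIVIAL = 5


@dataclass
class Impact:
    # Hypothetical yes/no questions a tester answers about a defect.
    blocks_all_use: bool = False          # system cannot be used or tested at all
    data_loss_or_security: bool = False   # data loss or a security breach
    disrupts_key_feature: bool = False    # core workflow broken, app still runs
    minor_functional_issue: bool = False  # something is wrong outside core flows


def classify(impact: Impact) -> Severity:
    """Return the level of the first (most severe) matching rule."""
    if impact.blocks_all_use:
        return Severity.BLOCKER
    if impact.data_loss_or_security:
        return Severity.CRITICAL
    if impact.disrupts_key_feature:
        return Severity.MAJOR
    if impact.minor_functional_issue:
        return Severity.MINOR
    return Severity.TRIVIAL  # purely cosmetic


print(classify(Impact(blocks_all_use=True)).name)        # BLOCKER
print(classify(Impact(disrupts_key_feature=True)).name)  # MAJOR
print(classify(Impact()).name)                           # TRIVIAL
```

Checking the most severe rule first means a defect that both crashes the app and loses data is labeled Blocker, not Critical.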

How to Manage Bug Severity Effectively: Best Practices for QA Teams

Managing bug severity properly improves triage accuracy, speeds up releases, and aligns dev and QA teams. Here are key best practices:

- Classify bugs based on real user and system impact

Analyze how the defect affects functionality, data, and user flows — not just the visible result. This ensures severity reflects actual damage.

- Document bugs clearly and completely

Include key details like screenshots, environment info, and steps to reproduce — a foundational element in test documentation[3], which improves clarity between QA and development.

- Update severity as the project evolves

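As new features are added or the codebase changes, revisit severity levels so they still reflect each defect’s actual technical impact. Shifting deadlines and business needs should change a bug’s priority rather than its severity.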

- Align severity understanding across teams

Ensure testers, developers, and product stakeholders use the same criteria to avoid confusion during triage and planning.
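Several of these practices can be enforced before a report even reaches triage. The Python sketch below checks a hypothetical `BugReport` for the details listed above and accepts only severity names from one shared vocabulary, so everyone uses the same five labels. The field names and the rule that a screenshot is always required are assumptions; real bug trackers define their own fields.

```python
from dataclasses import dataclass, field

# Shared vocabulary: the five levels from this guide.
ALLOWED_SEVERITIES = {"Blocker", "Critical", "Major", "Minor", "Trivial"}


@dataclass
class BugReport:
    # Hypothetical report shape; real trackers name these fields differently.
    title: str
    severity: str = ""     # expected to be one of ALLOWED_SEVERITIES
    environment: str = ""  # OS, device or browser, build number
    steps_to_reproduce: list[str] = field(default_factory=list)
    screenshots: list[str] = field(default_factory=list)  # file paths or URLs


def missing_details(report: BugReport) -> list[str]:
    """List the fields that must be fixed before the report goes to triage."""
    problems = []
    if report.severity not in ALLOWED_SEVERITIES:
        problems.append("severity")
    if not report.environment.strip():
        problems.append("environment")
    if not report.steps_to_reproduce:
        problems.append("steps_to_reproduce")
    if not report.screenshots:
        problems.append("screenshots")
    return problems


report = BugReport(
    title="Checkout button fails intermittently",
    severity="Major",
    environment="Android 14, example build 3.2.1",  # illustrative values
    steps_to_reproduce=["Add item to cart", "Tap Checkout"],
)
print(missing_details(report))  # ['screenshots']
```

Rejecting free-text severities such as "Urgent" also keeps priority language from leaking into the severity field.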

Modern Considerations in 2025

In 2025, evaluating bug severity goes beyond just technical failure. Evolving platforms, user expectations, and security demands have changed how QA teams approach severity levels. Here’s what’s different today:

1. Security issues = Critical by default

Regardless of their functional impact, vulnerabilities are treated as Critical because of data and compliance risks.

2. UX flaws can be high-severity

Poor usability, broken flows, or accessibility issues can hurt user retention and how the product is perceived, so they may deserve a higher severity than their technical footprint suggests.

3. One bug, multiple platforms

In cloud and cross-platform apps, a single defect can impact several environments, increasing severity.

4. Automation shifts detection earlier

Automated tests catch severe bugs earlier in the pipeline, when their impact is easier to isolate, which makes severity assessment during triage more accurate.

5. Defects in AI features need new rules

Unpredictable AI behavior (e.g., biased output, hallucinations) can harm users in subtle but profound ways, which often justifies a higher severity than the visible symptom suggests.
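Some of these considerations translate into simple escalation rules applied on top of a baseline severity. The Python sketch below encodes two of them as assumptions: a security issue is raised to at least Critical, and a defect affecting more than one platform moves up one level, although that rule alone never produces a Blocker. The function name, its parameters, and the exact thresholds are hypothetical.

```python
from enum import IntEnum


class Severity(IntEnum):
    BLOCKER = 1
    CRITICAL = 2
    MAJOR = 3
    MINOR = 4
    TRIVIAL = 5


def adjust_severity(
    base: Severity,
    is_security_issue: bool = False,
    platforms_affected: int = 1,
) -> Severity:
    """Apply hypothetical escalation rules to a baseline severity.

    Lower enum values are more severe, so min() picks the more severe level.
    """
    level = base
    if is_security_issue:
        # Security issues are at least Critical, whatever the functional impact.
        level = min(level, Severity.CRITICAL)
    if platforms_affected > 1 and level > Severity.CRITICAL:
        # A defect hitting several environments moves up one level;
        # this rule alone never escalates to Blocker.
        level = Severity(level - 1)
    return level


print(adjust_severity(Severity.MINOR, is_security_issue=True).name)   # CRITICAL
print(adjust_severity(Severity.MAJOR, platforms_affected=3).name)     # CRITICAL
print(adjust_severity(Severity.CRITICAL, platforms_affected=3).name)  # CRITICAL
```

Keeping escalation separate from the baseline classification makes it easy to see why a bug ended up at a given level during triage.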

Final Thoughts

Precise severity classification is essential for effective bug triage, smart prioritization, and faster, more reliable releases.

By understanding the five severity levels, distinguishing severity from priority, and following proven QA practices, teams can fix the right bugs at the right time, improving product quality and user satisfaction.

Need help classifying bug severity levels or optimizing QA processes?

Contact QATestLab[4] to set up a severity-driven testing workflow that ensures product quality.


References:

[1] Agile testing workflows: https://go.qatestlab.com/470RIAy

[2] Functional testing: https://qatestlab.com/services/manual-testing/functional-testing/

[3] Test documentation: https://qatestlab.com/

[4] Contact QATestLab: https://go.qatestlab.com/4mxit5g


Source URL: https://blog.qatestlab.com/2015/03/10/software-bugs-severity-levels/