Testing the Brains of Tomorrow’s Tech – AI and ML

The revolution in Artificial Intelligence (AI) and Machine Learning (ML) has accelerated the tech industry’s growth. According to one market forecast, the AI marketing industry will grow from just $1.9 billion in 2022 to a massive $22 billion by 2032. This surge is a testament to the transformative capabilities of AI and ML. They are not only changing how we live, work, and interact but also disrupting traditional industry norms and creating new opportunities for businesses worldwide. This growth is fueled by advances and applications across a broad spectrum of sectors.

From autonomous vehicles navigating our city streets to personalized shopping experiences powered by sophisticated recommendation algorithms, AI and ML have become the driving force behind many of the cutting-edge technologies we use today. They are integral to sectors ranging from healthcare, where ML algorithms assist in disease detection and drug discovery, to finance, where AI is changing how we manage and invest money.

However, with such rapid growth and high stakes, testing AI- and ML-based applications has never been more important. These technologies are only as reliable as the testing behind them. This article is your all-in-one guide to understanding the complexities of testing AI and ML applications.

AI vs ML: Knowing the Difference

Navigating the intricate terrain of technology, we often encounter the terms Artificial Intelligence (AI) and Machine Learning (ML). Although they are closely connected, they aren’t identical twins in the tech family. Recognizing the nuanced differences between them helps us understand their respective strengths and limits.

Think of Artificial Intelligence (AI) as the tech universe: an expansive concept that covers any computer program exhibiting traits we typically associate with human intelligence. AI’s ambitious aspiration is to build machines capable of performing any intellectual task a human can, from interpreting nuanced language to discerning intricate patterns. We can divide AI into two broad categories: Narrow AI, which is engineered to carry out a specific task such as voice recognition, and General AI, a still-theoretical form that could tackle any intellectual task a human can.

Machine Learning (ML), in turn, is more like a region within the vast AI universe. ML represents a distinct approach to realizing Artificial Intelligence: it enables machines to learn from experience (data) and improve their performance over time, mirroring the human ability to learn and evolve. ML algorithms use data to adapt and improve, making them valuable tools for predictive analysis, personalization, and many other applications.

In summary, while all Machine Learning is AI, not all AI is Machine Learning. AI is the grand dream of imitating human intelligence, while ML is the more practical, application-driven tool that’s turning that dream into reality.

By acknowledging the fine distinctions between AI and ML, we can better understand their unique potentials, strategic applications, and the symbiotic relationship between them.

AI and ML Testing: Understanding Challenges

Testing AI and ML systems is like finding your way through a maze. The challenges differ significantly from those of traditional software testing: a model’s behavior is learned from data rather than explicitly coded, and many models are retrained as new data arrives. Testing them requires innovative approaches that account for the following hurdles:

- Unpredictable outcomes. AI and ML models produce outputs learned from data rather than spelled out in code, and models that keep learning from new data can change their behavior over time. This makes results hard to validate, because for many inputs there is no predefined ‘correct’ answer to compare against (often called the test oracle problem).

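- Overfitting and underfitting. Balancing bias and variance is crucial but challenging. Overfitting (high variance) happens when a model is too complex for its training data and learns the noise along with the signal, so it performs well on training data but generalizes poorly to unseen data. Underfitting (high bias) occurs when a model is too simple to capture the underlying trends, so it performs poorly on both.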

- Quality over quantity. The performance of AI and ML applications is directly tied to the quality of the data they’re trained on. Poor-quality or biased data can significantly degrade both model performance and fairness.

- Continuous monitoring. Owing to their dynamic nature, AI and ML systems require ongoing monitoring and maintenance after release: production data can drift away from the training data, and retrained models can change behavior. This demands a substantial investment of time and resources.
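To make the overfitting and underfitting challenge more concrete, here is a minimal Python sketch under a toy setup: synthetic one-dimensional data with 20% label noise and a hand-rolled k-nearest-neighbour classifier. It compares training and validation accuracy; the `diagnose` helper and its thresholds (a 10-point gap, a 70% accuracy floor) are hypothetical and would need tuning for a real project.

```python
import random

def make_data(n, rng, noise=0.2):
    """Toy 1-D data: the true label is (x > 0.5), but a share of labels is flipped as noise."""
    data = []
    for _ in range(n):
        x = rng.random()
        y = int(x > 0.5)
        if rng.random() < noise:
            y = 1 - y
        data.append((x, y))
    return data

def knn_predict(train, x, k):
    """Majority vote among the k training points closest to x (k is odd, so no ties)."""
    nearest = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    return int(sum(label for _, label in nearest) * 2 > k)

def accuracy(train, data, k):
    """Share of points in `data` that the k-NN model built on `train` labels correctly."""
    return sum(knn_predict(train, x, k) == y for x, y in data) / len(data)

def diagnose(train_acc, val_acc, max_gap=0.10, min_acc=0.70):
    """Hypothetical thresholds: a large train/validation gap suggests overfitting,
    low accuracy on both splits suggests underfitting."""
    if train_acc - val_acc > max_gap:
        return "overfitting"
    if train_acc < min_acc and val_acc < min_acc:
        return "underfitting"
    return "ok"

rng = random.Random(42)
train, val = make_data(200, rng), make_data(200, rng)
for k in (1, 25):
    tr, va = accuracy(train, train, k), accuracy(train, val, k)
    print(f"k={k:2d}  train={tr:.2f}  val={va:.2f}  -> {diagnose(tr, va)}")
```

With k=1 the classifier memorizes every training label, noise included, so training accuracy is perfect while validation accuracy typically lags well behind. A larger k smooths the decision boundary and narrows the gap, while pushing k toward the size of the training set would tend to underfit.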

Addressing these challenges is vital in harnessing the full potential of AI and ML systems while ensuring they operate effectively, accurately, and fairly. As we evolve our understanding and mastery of these technologies, we’ll also continue to refine and improve our approaches to testing them.
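Some of the data-quality problems described above can be caught automatically before training. The sketch below is a hypothetical pre-training check, assuming the dataset is a list of Python dicts; the `data_quality_report` name and its thresholds (more than 5% missing values per column, less than a 10% share per class) are illustrative, not an established standard.

```python
from collections import Counter

def data_quality_report(rows, label_key, max_missing=0.05, min_class_share=0.10):
    """Return human-readable issues found in a list-of-dicts dataset.
    Thresholds are illustrative defaults, not industry standards."""
    issues = []
    n = len(rows)
    columns = sorted({key for row in rows for key in row})
    # 1. Missing values per column (key absent or set to None).
    for col in columns:
        missing = sum(1 for row in rows if row.get(col) is None)
        if missing / n > max_missing:
            issues.append(f"{col}: {missing}/{n} values missing")
    # 2. Exact duplicate records, which can leak between training and test splits.
    seen = Counter(tuple(sorted(row.items())) for row in rows)
    dupes = sum(count - 1 for count in seen.values() if count > 1)
    if dupes:
        issues.append(f"{dupes} duplicate row(s)")
    # 3. Under-represented classes in the label column.
    labels = Counter(row.get(label_key) for row in rows)
    for label, count in labels.items():
        if count / n < min_class_share:
            issues.append(f"label {label!r}: only {count}/{n} rows")
    return issues

# Hypothetical sample: one duplicate, one missing age, and a rare "fraud" class.
rows = [{"age": a, "label": "ok"} for a in (34, 34, None, 51, 47, 23, 38, 62, 45, 29, 31)]
rows.append({"age": 40, "label": "fraud"})
issues = data_quality_report(rows, label_key="label")
for issue in issues:
    print(issue)
```

One option is to run checks like these as a gate in the data pipeline, so a model is never trained on data that fails them.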

A Guided Path to Testing AI Applications: A Step-by-Step Approach and Essential Tools

Harnessing the benefits of AI and ML applications demands rigorous, meticulous testing. Given the sophisticated nature of these technologies, the approach differs significantly from traditional software testing. Let’s walk through a systematic approach to testing AI applications and explore some handy tools to aid this process.

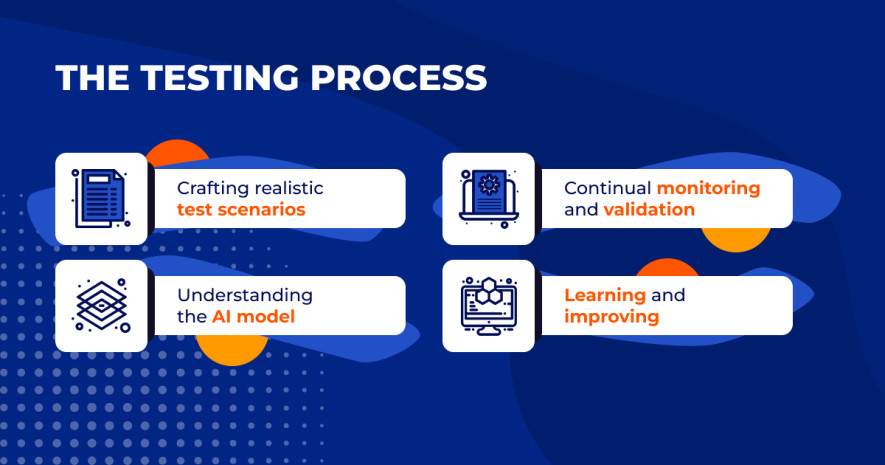

The Testing Process

Testing AI applications is not a one-size-fits-all task. Instead, it requires understanding the underlying AI model, careful planning, and constant monitoring. Let’s break it down into key steps:

- Understanding the AI model. The first step in testing an AI application is to understand the model thoroughly: its design, its inputs and outputs, and the logic it uses. A clear grasp of these factors can expose potential vulnerabilities and provide valuable insights into how the model may perform under different conditions.

- Crafting realistic test scenarios. Testing AI applications is akin to creating a diverse and comprehensive rehearsal for a performance. Defining a wide array of realistic scenarios helps ensure the application is prepared for the situations it will meet in production. The more varied the conditions and inputs you test, the more confidence you can have in the application’s robustness.

- Continual monitoring and validation. Testing AI applications is not a one-off event; it’s an ongoing marathon. Continually monitoring and validating outputs is vital for assessing their accuracy, reliability, and consistency. Regular checks can reveal whether the model needs adjustments or retraining on different data.

- Learning and improving. Just as AI models learn and improve, so should our approach to testing them. Because AI development is iterative, a single round of testing is not enough: as the model evolves, the testing process should evolve too, re-evaluating the model’s performance and refining the testing strategy as needed.
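For the continual-monitoring step, one widely used drift signal is the Population Stability Index (PSI), which compares how a feature is distributed in production with how it was distributed in the training data. The sketch below computes PSI in plain Python over equal-width bins. The synthetic data, the bin count, and the 0.10/0.25 cut-offs (a common industry rule of thumb) are assumptions, and PSI is only one of several drift metrics a team might choose.

```python
import math

def population_stability_index(expected, actual, bins=10, eps=1e-4):
    """PSI between a reference sample (e.g. training data) and a live sample.
    Bins are equal-width over the reference range; live values outside that range
    are clipped into the edge bins, and empty bins get a floor `eps` to avoid log(0)."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    # Each term is non-negative: (a - e) and log(a / e) always share a sign.
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

def drift_status(psi):
    """Commonly quoted rule of thumb; tune the cut-offs for your own system."""
    if psi < 0.10:
        return "stable"
    if psi < 0.25:
        return "moderate drift"
    return "significant drift"

reference = [i / 100 for i in range(100)]   # feature values seen during training
live = [0.5 + i / 200 for i in range(100)]  # production values shifted upward
score = population_stability_index(reference, live)
print(f"PSI={score:.2f} -> {drift_status(score)}")
```

Running such a check on a schedule, per feature and on the model’s output scores, turns “regular checks” into an alert that can trigger the retraining and re-evaluation described above.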

Tools of the Trade

In the diverse tech landscape, we have a wealth of tools that can assist in testing AI and ML applications. Let’s explore some front-runners:

TensorFlow, an open-source platform, is a treasure trove of libraries and resources for building and training ML models. It also provides evaluation utilities, such as built-in Keras metrics and TensorFlow Model Analysis (TFMA), that report performance and accuracy metrics.
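As an illustration of the kind of metrics such tools report, the sketch below computes accuracy, precision, recall, and F1 for a binary classifier. TensorFlow’s Keras API offers built-in counterparts such as `tf.keras.metrics.Precision` and `tf.keras.metrics.Recall`; this version is plain Python to avoid the dependency, and the labels are made up for the example.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (1 = positive class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # correctly flagged positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # missed positives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # correctly ignored negatives
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

y_true = [1, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Reporting precision and recall alongside accuracy matters because, on imbalanced data, accuracy alone can look good even when the model misses most positive cases.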

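LIME (Local Interpretable Model-agnostic Explanations) is a Python library that acts as an interpreter for ML models. It explains individual predictions by fitting a simple, interpretable model around each one, offering insights into your models’ decision-making, a crucial factor in refining and validating AI/ML models.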
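LIME’s core idea is to perturb the input around one instance, query the model, and fit a simple local model weighted by proximity to that instance. The sketch below is a heavily simplified, LIME-inspired version in plain Python, not the library’s API: it perturbs one feature at a time and fits a weighted least-squares slope per feature, whereas LIME samples perturbations jointly and fits a sparse linear model. The `credit_model` scorer and all parameter defaults are hypothetical. With the real library, tabular models are usually explained through `lime.lime_tabular.LimeTabularExplainer`.

```python
import math
import random

def local_feature_effects(predict, instance, n_samples=500, scale=0.1, kernel_width=0.25, seed=0):
    """LIME-inspired sketch: for each feature, perturb it around `instance`, weight
    samples by proximity, and fit a weighted least-squares slope of prediction vs. change.
    Simplification: features are perturbed one at a time, not jointly as in LIME."""
    rng = random.Random(seed)
    effects = []
    for j in range(len(instance)):
        deltas, outputs, weights = [], [], []
        for _ in range(n_samples):
            delta = rng.gauss(0.0, scale)
            point = list(instance)
            point[j] += delta
            deltas.append(delta)
            outputs.append(predict(point))
            weights.append(math.exp(-(delta ** 2) / kernel_width ** 2))  # nearer samples count more
        w_sum = sum(weights)
        d_mean = sum(w * d for w, d in zip(weights, deltas)) / w_sum
        y_mean = sum(w * y for w, y in zip(weights, outputs)) / w_sum
        cov = sum(w * (d - d_mean) * (y - y_mean) for w, d, y in zip(weights, deltas, outputs))
        var = sum(w * (d - d_mean) ** 2 for w, d in zip(weights, deltas))
        effects.append(cov / var)
    return effects

def credit_model(features):
    """Hypothetical black-box scorer: income raises the score, debt lowers it."""
    income, debt = features
    return 1 / (1 + math.exp(-(2.0 * income - 3.0 * debt)))

effects = local_feature_effects(credit_model, [0.6, 0.4])
print(dict(zip(["income", "debt"], effects)))
```

A positive effect means that, near this instance, increasing the feature raises the model’s score; here income comes out positive and debt negative, matching how the toy model was built.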

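Developed by Salesforce Research together with researchers at Stanford, Robustness Gym is designed to test the robustness of AI models, particularly NLP models. It helps reveal a model’s limitations and measures performance under different scenarios, such as transformed inputs and specific data subpopulations.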
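Robustness Gym organizes evaluations around ideas such as input transformations and data subpopulations. The snippet below does not use its API; it is a plain-Python sketch of the transformation idea, assuming a hypothetical keyword-based sentiment classifier: perturb each input (random casing, an adjacent-character typo) and measure how often the prediction stays the same.

```python
import random

def perturb_typo(text, rng):
    """Swap two adjacent characters at a random position (a simple typo perturbation)."""
    if len(text) < 2:
        return text
    i = rng.randrange(len(text) - 1)
    return text[:i] + text[i + 1] + text[i] + text[i + 2:]

def perturb_case(text, rng):
    """Randomly upper- or lower-case the whole input."""
    return text.upper() if rng.random() < 0.5 else text.lower()

def consistency_rate(classify, texts, perturb, trials=20, seed=0):
    """Share of (text, perturbation) pairs where the prediction is unchanged."""
    rng = random.Random(seed)
    same = total = 0
    for text in texts:
        original = classify(text)
        for _ in range(trials):
            same += classify(perturb(text, rng)) == original
            total += 1
    return same / total

def keyword_sentiment(text):
    """Hypothetical toy classifier standing in for a real NLP model."""
    words = text.lower().split()
    return "positive" if any(w in ("great", "love", "excellent") for w in words) else "negative"

reviews = ["I love this phone", "Great battery life", "Screen broke after a week"]
rates = {name: consistency_rate(keyword_sentiment, reviews, perturb)
         for name, perturb in (("case", perturb_case), ("typo", perturb_typo))}
print(rates)
```

The toy classifier lowercases its input, so it is fully consistent under case changes, but a typo inside a keyword such as “love” can flip its output, which is exactly the kind of weakness a robustness test is meant to surface.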

The What-If Tool is a visualization tool by Google that helps illustrate the behavior of ML models. It’s particularly useful for understanding how different features affect the model’s predictions.
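One of the ideas the What-If Tool makes visual is counterfactual probing: change a single feature, hold the others fixed, and watch how the prediction responds. The plain-Python sketch below shows that idea. `loan_model` is a hypothetical rule standing in for a trained model, and `what_if`/`first_flip` are illustrative helper names, not part of the tool.

```python
def what_if(predict, instance, feature, values):
    """Return (value, prediction) pairs from changing one feature and holding the rest fixed."""
    results = []
    for v in values:
        probe = dict(instance)  # copy so the original instance is never modified
        probe[feature] = v
        results.append((v, predict(probe)))
    return results

def first_flip(predict, instance, feature, values):
    """First value, in the order given, that changes the model's decision, or None."""
    baseline = predict(instance)
    for v, pred in what_if(predict, instance, feature, values):
        if pred != baseline:
            return v
    return None

def loan_model(applicant):
    """Hypothetical rule-based model standing in for a trained classifier."""
    score = 0.5 * applicant["income"] / 1000 - 2.0 * applicant["missed_payments"]
    return "approve" if score >= 20 else "reject"

applicant = {"income": 38000, "missed_payments": 1}
for income, decision in what_if(loan_model, applicant, "income", range(30000, 60001, 5000)):
    print(income, decision)
flip = first_flip(loan_model, applicant, "income", range(30000, 60001, 1000))
print("decision flips at income =", flip)
```

With the toy model’s weights, this applicant is rejected at an income of 38,000 and the decision first flips to approval at 44,000. Probes like this help testers check whether a model reacts to features in the direction and at the magnitude the business expects.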

IBM’s contribution to the field is AI Fairness 360 (AIF360), an open-source toolkit that helps detect and mitigate bias in ML models. It provides metrics for testing model fairness, a critical aspect of any AI/ML system.
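Two of the group-fairness metrics AI Fairness 360 provides are statistical parity difference (the favorable-outcome rate of the unprivileged group minus that of the privileged group) and disparate impact (the ratio of the same two rates). The sketch below computes both in plain Python rather than through the AIF360 API; the predictions and group labels are invented for illustration.

```python
def favorable_rate(preds, groups, group, favorable=1):
    """Share of members of `group` who received the favorable prediction."""
    selected = [p for p, g in zip(preds, groups) if g == group]
    return sum(1 for p in selected if p == favorable) / len(selected)

def fairness_metrics(preds, groups, unprivileged, privileged, favorable=1):
    """Statistical parity difference and disparate impact, using the
    unprivileged-vs-privileged convention that AI Fairness 360 documents."""
    p_unpriv = favorable_rate(preds, groups, unprivileged, favorable)
    p_priv = favorable_rate(preds, groups, privileged, favorable)
    return {
        "statistical_parity_difference": p_unpriv - p_priv,
        "disparate_impact": p_unpriv / p_priv,  # undefined if the privileged rate is 0
    }

preds = [1, 0, 1, 0, 0, 1, 1, 1, 0, 1]  # model decisions (1 = favorable, e.g. loan approved)
groups = ["A"] * 5 + ["B"] * 5            # hypothetical protected attribute
result = fairness_metrics(preds, groups, unprivileged="A", privileged="B")
print(result)
```

Here group A receives the favorable outcome 40% of the time and group B 80%, giving a statistical parity difference of -0.4 and a disparate impact of 0.5. A disparate impact of 1.0 means both groups receive favorable outcomes at equal rates; values below 0.8 are often flagged, echoing the “four-fifths rule” from US employment guidelines.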

Used within a robust testing strategy, these tools can help ensure the accuracy, reliability, fairness, and robustness of AI and ML applications.

Testing AI and ML applications is a complex yet crucial task. As these technologies continue to revolutionize our world, understanding the unique challenges and the key factors in effective testing becomes increasingly important. This knowledge helps ensure the reliability, accuracy, and fairness of AI and ML applications and paves the way for their future advancement.

Embracing the Future with AI and ML Testing

As we continue to push the boundaries of what’s possible with AI and ML, we are likely to see even more impressive figures by 2032. With every industry vying to incorporate these technologies, the future of AI and ML promises to be exciting and transformative, heralding a new era of innovation and growth.

As AI and ML applications continue to be integral to our digital lives, adequate testing of these technologies is essential. The future of software testing lies in developing innovative approaches and tools specifically designed for AI and ML applications.

At QATestLab, we are more than ready to dive into testing your AI-based software. Our team has gained substantial experience in testing advanced AI and ML applications. We understand the nuances and complexities involved, and we can effectively ensure the smooth operation of your AI systems. Considering implementing AI in your software? Let’s discuss your testing strategies. Together, we can make sure your AI and ML technologies perform optimally.
