QA for AI: Why Test Already Smart Artificial Intelligence?

What if AI acts wrongly? Or, the most paranoid question: what if an AI takeover happens? Testing and quality assurance services are the best remedy for reducing risks of all kinds. But while much has been written about AI technology, testing it remains a far less discussed topic. To change that, let's dive into an in-depth investigation from QATestLab into what QA for AI involves.

Why Does AI Require a Different Testing Approach?

The concept of a machine performing human-like tasks emerged in the 1950s, after Alan Turing explored the mathematical possibility of Artificial Intelligence (AI). Today, in 2020, this concept has become a reality. More and more products are being developed based on AI and Machine Learning (ML) algorithms: the market grew from $28.42 billion in 2019 to $40.74 billion in 2020.

What makes AI-powered systems so special? They combine conventional software with machine learning capabilities. The whole secret of ML lies in self-learning: the model analyzes information and can produce new results each time. In other words, it imitates aspects of human intelligence. Developing and testing such a product is much more complicated than developing and testing conventional software.

Compared with traditional software testing, ensuring quality for AI systems requires a fundamentally different approach. Machine learning models cannot simply be tested once and shipped as finished products, because they keep changing as they learn. There are no strict rules, predefined testing techniques, or established methodology, and no one can know exactly what to expect from an AI system. That's why there are so many concerns and 'negative and skeptical' scenarios connected with AI.

Strategy to Test Artificial Intelligence

This may break the usual pattern, but AI algorithms are tested at the same time as they are developed. Accordingly, this work is most often performed by data scientists or ML engineers. It requires three components:

- Training dataset: data used to train the AI model

- Development dataset: also called a validation dataset; developers use it to check the system's performance once the model has learned from the training dataset

- Testing dataset: held-out data used for the final evaluation of the system's performance
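The three-way split can be sketched in Python as follows. This is a minimal illustration, assuming a plain shuffled split with hypothetical 70/15/15 proportions; real projects often need stratified or time-based splits instead, and the function name `split_dataset` is our own.

```python
import random


def split_dataset(samples, train_pct=70, dev_pct=15, seed=42):
    """Shuffle samples and split them into training, development and testing sets.

    The percentages are illustrative assumptions, not a recommended standard.
    """
    if train_pct <= 0 or dev_pct < 0 or train_pct + dev_pct >= 100:
        raise ValueError("percentages must leave a share for the testing set")
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_train = len(shuffled) * train_pct // 100  # integer arithmetic avoids float rounding
    n_dev = len(shuffled) * dev_pct // 100
    train = shuffled[:n_train]
    dev = shuffled[n_train:n_train + n_dev]  # used to tune the model during development
    test = shuffled[n_train + n_dev:]        # held out for the final evaluation only
    return train, dev, test


if __name__ == "__main__":
    train, dev, test = split_dataset(range(100))
    print(len(train), len(dev), len(test))  # 70 15 15
```

Keeping the testing set untouched until the end matters: if it is also used for tuning, its score no longer reflects how the model behaves on unseen data.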

And here is the most complicated yet exciting part. During development, the AI algorithm repeatedly goes through training and validation. Based on the results, developers change the model or its configuration until the algorithm works as expected.

But how? Of course, they don't merely develop an AI algorithm, throw training data at it, and call it a day. They have to verify that the model trained on that data classifies or regresses data accurately enough and generalizes well, without overfitting or underfitting. If it falls short, they go back, change the hyperparameters, and rebuild the model.
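A toy version of this train, validate, and adjust loop is sketched below. It assumes a hand-written k-nearest-neighbours classifier on one-dimensional data, treats `k` as the hyperparameter, and uses hypothetical thresholds for flagging overfitting (training accuracy much higher than development accuracy) and underfitting (low training accuracy). None of these names or numbers come from the article.

```python
def knn_predict(train, x, k):
    """Predict a 0/1 label for x by majority vote of its k nearest training points."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    votes = sum(label for _, label in nearest)
    return 1 if 2 * votes > k else 0


def accuracy(train, data, k):
    """Share of (x, label) pairs in data that the model built from `train` gets right."""
    return sum(knn_predict(train, x, k) == y for x, y in data) / len(data)


def tune_k(train, dev, candidates=(1, 3, 5, 7), max_gap=0.15, min_train_acc=0.6):
    """Try each k, keep the one with the best development accuracy, collect warnings.

    max_gap and min_train_acc are hypothetical thresholds chosen for illustration.
    """
    best_k, best_dev, warnings = None, -1.0, []
    for k in candidates:
        train_acc = accuracy(train, train, k)
        dev_acc = accuracy(train, dev, k)
        if train_acc - dev_acc > max_gap:
            warnings.append(f"k={k}: possible overfitting (train {train_acc:.2f}, dev {dev_acc:.2f})")
        if train_acc < min_train_acc:
            warnings.append(f"k={k}: possible underfitting (train {train_acc:.2f})")
        if dev_acc > best_dev:
            best_k, best_dev = k, dev_acc
    return best_k, best_dev, warnings


if __name__ == "__main__":
    # Two clusters plus one mislabelled training point at 7.2.
    train = [(0, 0), (1, 0), (2, 0), (3, 0), (6, 1), (7, 1), (8, 1), (9, 1), (7.2, 0)]
    dev = [(0.5, 0), (2.5, 0), (6.5, 1), (7.3, 1)]
    best_k, best_dev, warnings = tune_k(train, dev)
    print(best_k, best_dev)  # 3 1.0
    print(*warnings, sep="\n")
```

With `k=1` the model memorizes the mislabelled point, so it scores perfectly on training data but misses a development example, which is exactly the gap the overfitting check reports; a larger `k` smooths the noise out.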

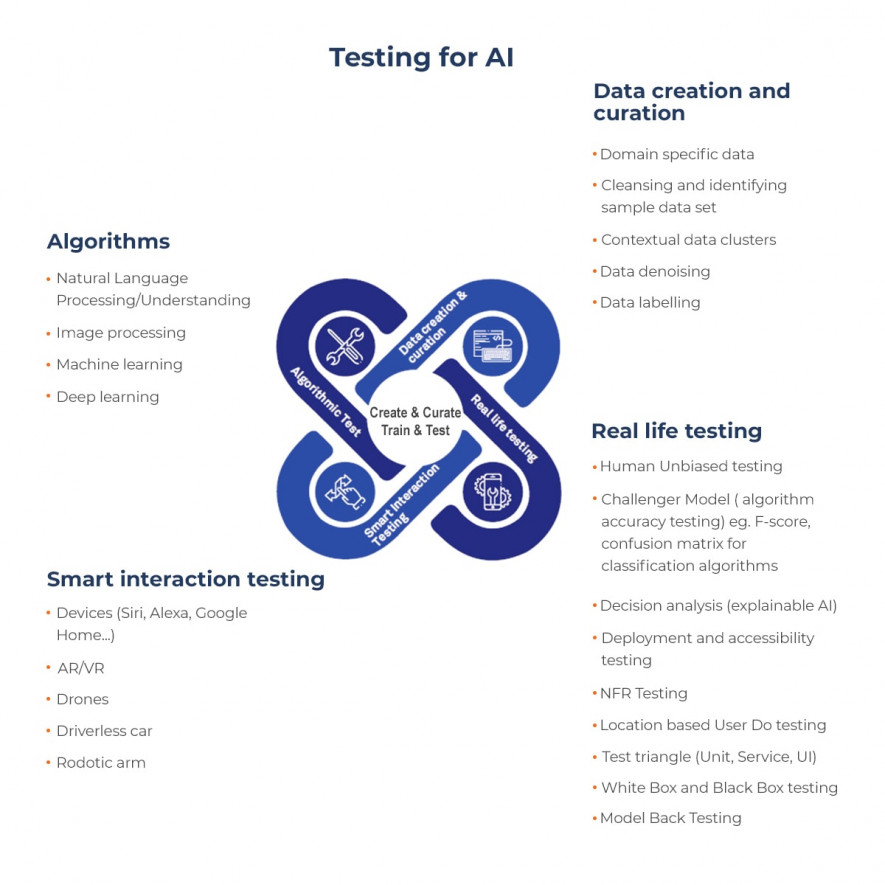

To understand the full picture of testing for AI, here are its key elements:

As you can see, the machine learning model is built and tested simultaneously. But each case is different, since each involves its own data sets, tasks, and machine learning algorithms.

As for the testing itself, some methodologies from standard testing carry over to artificial intelligence too. For example, techniques such as model performance testing and metamorphic testing are used for black-box testing. In addition, standard approaches to performance and security testing are by no means superfluous.
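Metamorphic testing suits ML models because it needs no oracle: instead of knowing the correct label for every input, the tester checks a relation that must hold between outputs for related inputs. The sketch below assumes a toy k-nearest-neighbours classifier (redefined so the block runs on its own) and one relation chosen for illustration: shifting every feature, in both the training data and the queries, by the same constant must not change any prediction, since kNN depends only on distances. `threshold_predict` is a deliberately faulty, hypothetical model that the relation catches.

```python
def knn_predict(train, x, k=3):
    """Toy 1-D kNN classifier: majority vote of the k nearest training points."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    votes = sum(label for _, label in nearest)
    return 1 if 2 * votes > k else 0


def threshold_predict(train, x):
    """Hypothetical faulty model: ignores the training data, uses a hard-coded cut-off."""
    return 1 if x >= 5 else 0


def shift_relation_violations(predict, train, inputs, shift):
    """Return the inputs whose prediction changes when all data is shifted by `shift`."""
    shifted_train = [(x + shift, label) for x, label in train]
    return [x for x in inputs if predict(train, x) != predict(shifted_train, x + shift)]


if __name__ == "__main__":
    train = [(0, 0), (1, 0), (2, 0), (3, 0), (6, 1), (7, 1), (8, 1), (9, 1)]
    inputs = list(range(10))
    print(shift_relation_violations(knn_predict, train, inputs, shift=100))        # []
    print(shift_relation_violations(threshold_predict, train, inputs, shift=100))  # [0, 1, 2, 3, 4]
```

Integer data is used on purpose: with floating-point features, a shift can change rounding and reorder exact ties, so practical metamorphic checks usually compare outputs with a tolerance or avoid tie-prone relations.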

What Types of AI Can Be Tested?

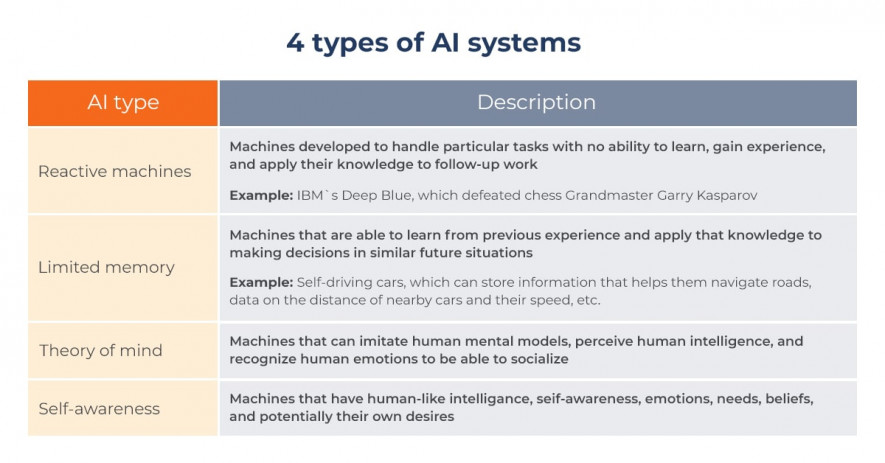

Since AI is a broad concept, an umbrella term covering a variety of technologies, testing it is an equally broad realm. All in all, there are four key types of AI, and each requires its own approach to development and testing. Let's take a closer look at these types:

Reactive machines are the simplest type of AI-based system. They are basic in that they do not store 'memories' or use past experiences to determine future actions. This type of AI calls for Model-Based Testing.
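Because a reactive machine maps each input directly to an output, its expected behaviour can be written down as a model, and test cases can be generated from that model. The sketch below is a hypothetical example, not a tool from the article: `ReactivePolicy` stands in for an obstacle-avoidance system, `SPEC` is an independently written model of its expected behaviour, inputs are derived from partition boundaries, and a second check confirms the system keeps no memory by feeding it unrelated inputs before repeating each test.

```python
import math
import random


class ReactivePolicy:
    """Hypothetical system under test: picks an action from the current distance only."""

    def act(self, distance_cm):
        if distance_cm < 20:
            return "turn"
        if distance_cm < 50:
            return "slow"
        return "forward"


# Model of expected behaviour: (lower bound inclusive, upper bound exclusive, action).
SPEC = [(0, 20, "turn"), (20, 50, "slow"), (50, math.inf, "forward")]


def expected_action(spec, distance_cm):
    for low, high, action in spec:
        if low <= distance_cm < high:
            return action
    raise ValueError("distance outside the modelled domain")


def generate_tests(spec):
    """Derive inputs from the model: each partition's bounds plus an interior point."""
    inputs = set()
    for low, high, _ in spec:
        inputs.add(low)
        if math.isinf(high):
            inputs.add(low + 100)  # arbitrary point inside the open-ended partition
        else:
            inputs.update({high - 1, (low + high) // 2})
    return sorted(inputs)


def run_model_based_tests(system, spec, seed=0):
    rng = random.Random(seed)
    failures = []
    for d in generate_tests(spec):
        if system.act(d) != expected_action(spec, d):
            failures.append(("wrong output", d))
        for _ in range(5):  # unrelated inputs; a reactive machine must not remember them
            system.act(rng.randint(0, 200))
        if system.act(d) != expected_action(spec, d):
            failures.append(("depends on history", d))
    return failures


if __name__ == "__main__":
    print(generate_tests(SPEC))                           # [0, 10, 19, 20, 35, 49, 50, 150]
    print(run_model_based_tests(ReactivePolicy(), SPEC))  # []
```

In real model-based testing the model is usually more abstract than the implementation (for example, a state machine), so that it can catch design errors rather than simply mirror the code.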

Another type is limited memory AI, whose most popular application is self-driving cars. For this type, it is necessary to conduct. By the way, we recently posted an article on testing self-driving vehicles. The two other types, theory of mind and self-awareness, are the most complex ones. There is no single test for such systems yet, but some approaches already exist.
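Limited memory AI is commonly described as using recent observations, kept only for a limited time, to decide what to do next. The sketch below is a hypothetical, heavily simplified component in that spirit, not how any real self-driving stack works: `ClosingSpeedEstimator` keeps the last few distance readings to a vehicle ahead in a bounded `collections.deque`, and two checks test the properties that make its memory "limited": the memory never grows past the window, and older readings stop influencing the output.

```python
from collections import deque


class ClosingSpeedEstimator:
    """Hypothetical limited-memory component: estimates how fast the gap to the
    vehicle ahead is shrinking, using only the last `window` distance readings."""

    def __init__(self, window=3, dt_s=1.0):
        self.readings = deque(maxlen=window)  # the oldest reading is dropped automatically
        self.dt_s = dt_s                      # assumed fixed time between readings

    def observe(self, distance_m):
        self.readings.append(distance_m)

    def closing_speed(self):
        """Average decrease in distance per second across the stored readings (m/s)."""
        if len(self.readings) < 2:
            return 0.0
        return (self.readings[0] - self.readings[-1]) / (self.dt_s * (len(self.readings) - 1))


def memory_is_bounded(window=3, n_observations=10):
    est = ClosingSpeedEstimator(window)
    for i in range(n_observations):
        est.observe(100 - i)
    return len(est.readings) == window


def old_history_is_forgotten():
    """Estimators with different old histories but identical recent readings must agree."""
    a, b = ClosingSpeedEstimator(3), ClosingSpeedEstimator(3)
    for d in (500, 400, 300):
        a.observe(d)
    for d in (10, 20, 30):
        b.observe(d)
    for d in (50, 45, 40):
        a.observe(d)
        b.observe(d)
    return a.closing_speed() == b.closing_speed() == 5.0


if __name__ == "__main__":
    print(memory_is_bounded(), old_history_is_forgotten())  # True True
```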

Final Word: What Are the Problems of Testing AI

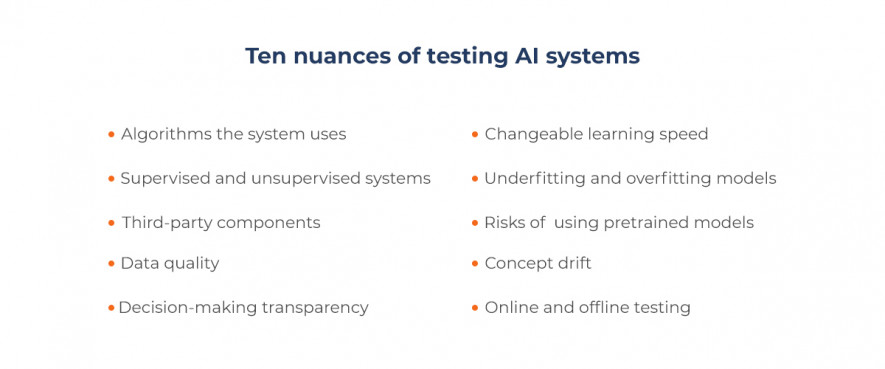

QA for AI is a new yet critical issue that is only now gaining traction. AI systems can still go wrong; therefore, the ultimate value of testing lies in providing security and safety for humanity. Why do we still have no precise picture of how to test AI systems? One significant challenge is obtaining training data sets that are large and comprehensive enough to meet 'ML testing' needs. Other problems include:

All this means one thing: it is almost impossible to do QA for AI projects the same way as for other projects. What is tested, how, and when differs significantly from traditional projects. That's why AI testing should be conducted exclusively by experts with deep knowledge and understanding of not only QA but also the AI domain.

……………………………………..

Keep up with the latest trends in QA: visit our blog and follow us on Facebook and LinkedIn.

Don't hesitate to contact us if you need testing.

Let’s grow together!


About Article Author


has more than 2 years of experience in blogging, copywriting, copyediting, and proofreading of web content.
